Software Test Automation: The Functional Checks

Can we increase our understanding and expectations of a system by combining various functional automation tests at different steps within the development lifecycle?

We look at how the fundamental disciplines of unit, integration, API, UI and infrastructure automation can be combined, and how an approach that is distributed (through the SDLC) yet centralised (for dashboards, reporting and alerts) can lower the barrier to entry and provide faster feedback.

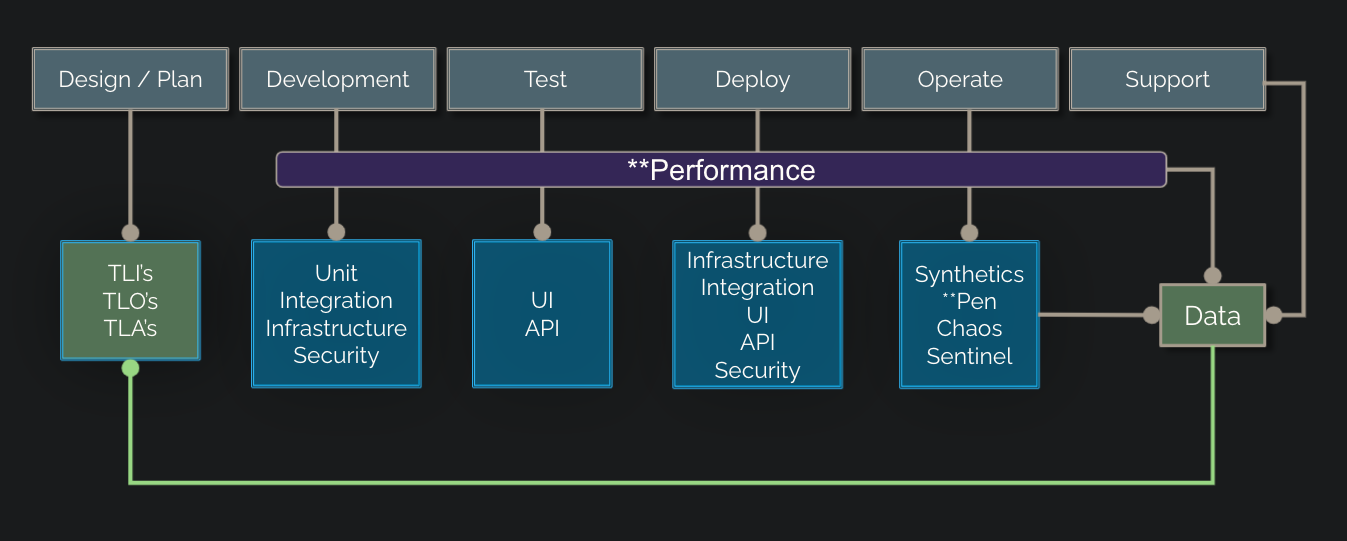

What might an ideal automation distribution look like if we split a percentage of functional checks across each part of the SDLC?

And if we had the opportunity to run different automated tests across the development lifecycle, might it look something like this?

NOTE: Performance testing, which runs across four cycles (Development, Test, Deploy, Operate), and pen testing are not included, as they are more non-functional in focus. For more on performance engineering, you can check out the blog here.

Let's take a look at each of the automated functional checks that we would usually implement to test the known state of our application:

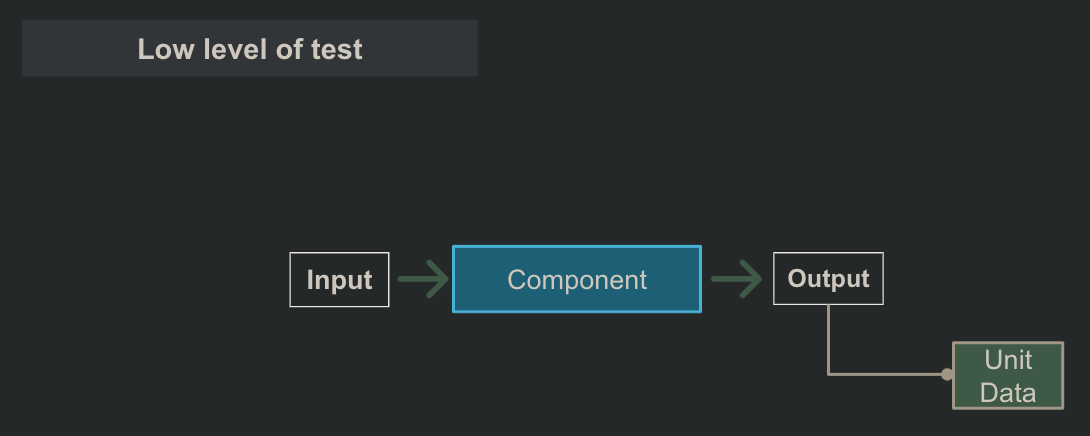

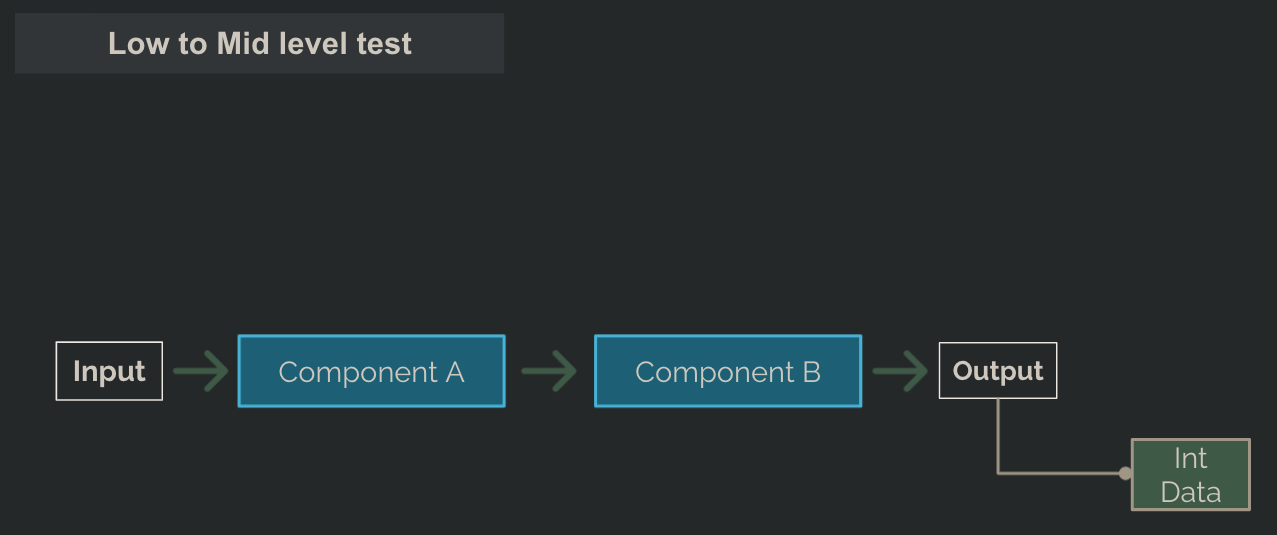

Tests are generally categorised as low, medium or high level. The higher the level, the more complicated and expensive a test is to implement, execute, troubleshoot and maintain, and the longer it takes to run.

The Unit test

A fast-running test against a method or function that validates its behaviour: we give an input and expect a certain output.

Because they give quick feedback, unit tests are ideal for running locally, in the CI pipeline and as a first line of defence in the CD pipeline.
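To make the input/output idea concrete, here is a minimal sketch using Python's built-in `unittest` module. The `apply_discount` function is hypothetical and stands in for whatever method or function you are testing.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_known_input_gives_expected_output(self):
        # Given a known input, we expect a certain output.
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        # Behaviour on bad input is part of the contract too.
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)
```

Saved in a file such as `test_discount.py` (a hypothetical name), it can be run locally or in CI with `python -m unittest test_discount`. Because nothing outside the function is touched, it runs in milliseconds, which is what makes it practical on every commit.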

The Integration test

Used to confirm integration with other dependencies (APIs, databases, message hubs). They provide fast feedback and are useful for determining whether you are interacting correctly with the required dependencies.

Often these dependencies are replaced with test doubles: stubbed for state verification and/or mocked for behaviour verification, so you rely less on third-party services being available.

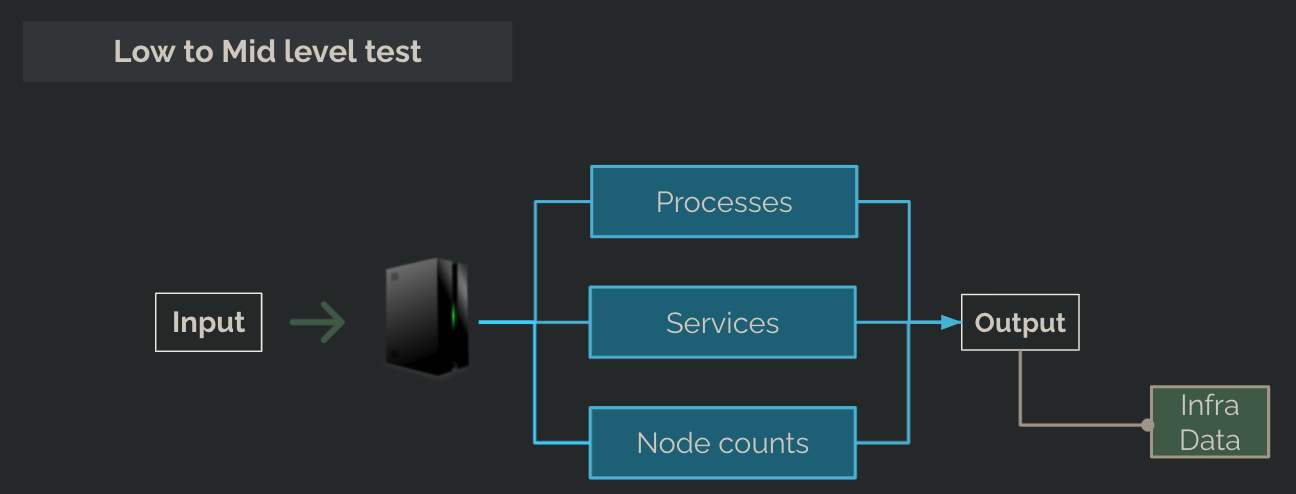
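The difference between stubbing and mocking is easier to see in code. The sketch below assumes a hypothetical `OrderService` that calls a payments API and saves to a repository. `StubPaymentsClient` and `InMemoryRepository` are illustrative stand-ins, and `unittest.mock.Mock` from Python's standard library plays the role of the mock.

```python
from unittest.mock import Mock


class OrderService:
    """Hypothetical service that depends on a payments API and a repository."""

    def __init__(self, payments_client, repository):
        self.payments = payments_client
        self.repository = repository

    def place_order(self, order_id: str, amount: float) -> str:
        result = self.payments.charge(order_id=order_id, amount=amount)
        status = "PAID" if result["approved"] else "DECLINED"
        self.repository.save(order_id, status)
        return status


class StubPaymentsClient:
    """Stub: returns a canned response instead of calling the real API."""

    def __init__(self, approved: bool):
        self.approved = approved

    def charge(self, order_id: str, amount: float) -> dict:
        return {"approved": self.approved}


class InMemoryRepository:
    """Lightweight stand-in for a database so the resulting state can be inspected."""

    def __init__(self):
        self.statuses = {}

    def save(self, order_id: str, status: str) -> None:
        self.statuses[order_id] = status


def test_state_verification_with_stub():
    # State verification: run the code, then inspect the state it left behind.
    repository = InMemoryRepository()
    service = OrderService(StubPaymentsClient(approved=True), repository)
    service.place_order("A-1", 10.0)
    assert repository.statuses == {"A-1": "PAID"}


def test_behaviour_verification_with_mock():
    # Behaviour verification: assert on how the dependencies were called.
    payments = Mock()
    payments.charge.return_value = {"approved": False}
    repository = Mock()
    OrderService(payments, repository).place_order("A-2", 5.0)
    payments.charge.assert_called_once_with(order_id="A-2", amount=5.0)
    repository.save.assert_called_once_with("A-2", "DECLINED")
```

In a test module, pytest would discover the two `test_` functions automatically; they can also simply be called directly. Tests like these complement, rather than replace, a smaller number of checks against the real dependencies.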

The Infrastructure test

Used to verify infrastructure behaviour and can include checks on directory permissions, running processes and services, open ports, node counts, storage accounts etc.

Handy to run upon application deployment (VMs and Kubernetes) or when releasing a new SOE (standard operating environment).

These are often underutilised, yet they can help round off a well-orchestrated automation approach.

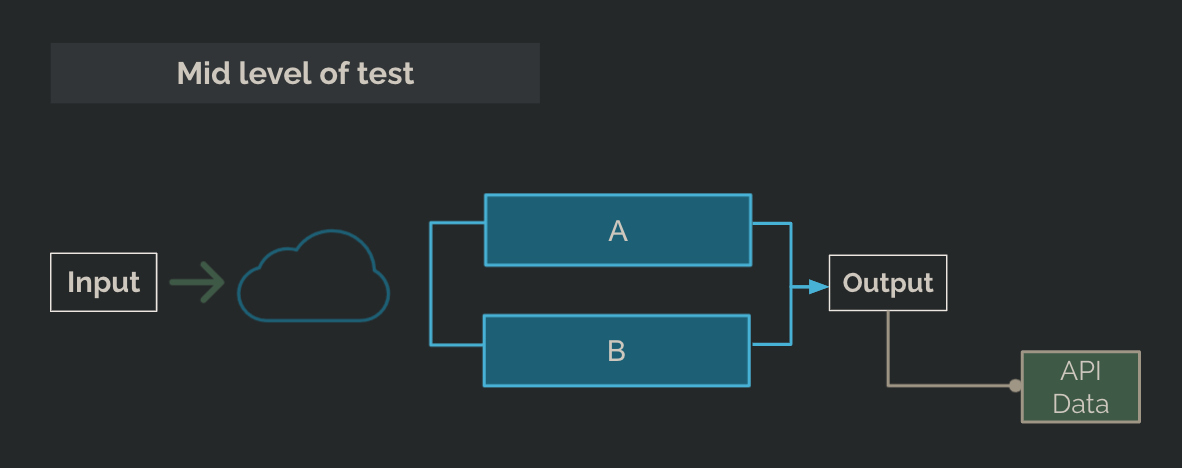
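Many infrastructure checks boil down to small, scriptable assertions. As a rough sketch using only Python's standard library, the helpers below check that a TCP port accepts connections and that a path has the expected permission bits. The `/var/log/myapp` path and port 443 in the example are hypothetical; dedicated tools such as Testinfra or InSpec offer richer versions of these checks.

```python
import os
import socket
import stat


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def has_mode(path: str, expected_mode: int) -> bool:
    """Return True if the permission bits of path exactly match expected_mode (e.g. 0o750)."""
    return stat.S_IMODE(os.stat(path).st_mode) == expected_mode


def run_checks(checks: dict) -> dict:
    """Run each named check (a zero-argument callable) and record pass/fail."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except OSError:
            # A missing path or unreachable host counts as a failed check.
            results[name] = False
    return results


if __name__ == "__main__":
    # Hypothetical post-deployment checks; adjust paths and ports to your SOE.
    report = run_checks({
        "log dir is 0o750": lambda: has_mode("/var/log/myapp", 0o750),
        "https port open": lambda: port_is_open("localhost", 443),
    })
    for name, passed in report.items():
        print(f"{'PASS' if passed else 'FAIL'}  {name}")
```

Returning a name-to-result mapping, rather than stopping at the first failure, makes it easy to report every check from a deployment in one go.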

The API test

The API test often triggers a sequence of actions: you send a request and expect a particular response code with the right payload.

It can usually give you good feedback that several parts of the system are working as expected (APIs, databases, hubs, caches, load balancers).

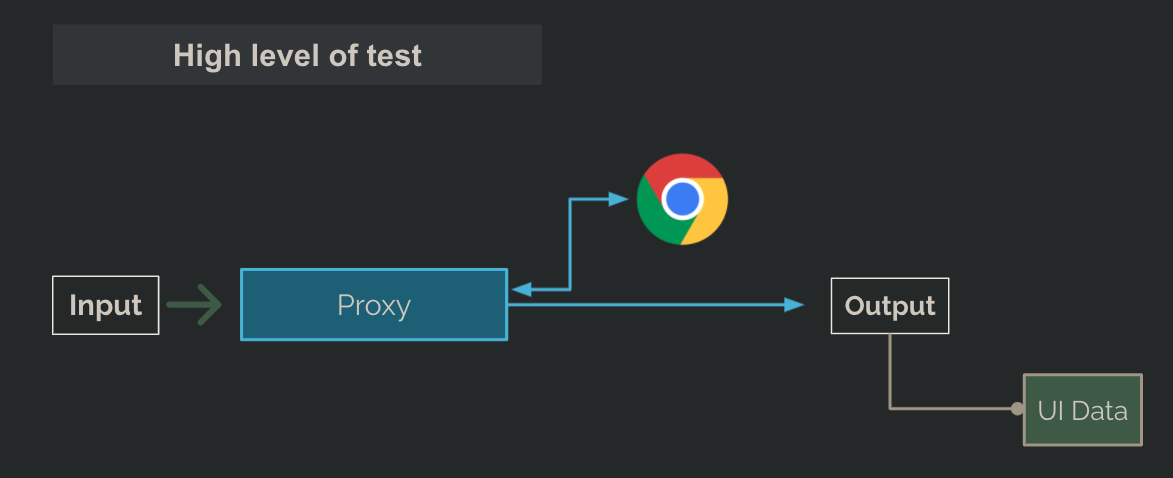
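A minimal sketch of an API check using only Python's standard library: it sends a request, then asserts on the response code and the payload. The `/health` endpoint and its `{"status": "ok"}` body are hypothetical, and `HealthHandler` is a local stand-in server so the example runs on its own; in a pipeline, `check_health` would instead be pointed at the deployed environment's base URL.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a deployed service exposing GET /health."""

    def do_GET(self):
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, *args):
        pass  # silence per-request logging during the check


def check_health(base_url: str) -> dict:
    """API check: send a request, expect a 200 and the right JSON payload."""
    # An empty ProxyHandler ignores proxy environment variables; drop it if a proxy is required.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(f"{base_url}/health", timeout=5) as response:
        assert response.status == 200, f"unexpected status {response.status}"
        assert response.headers["Content-Type"] == "application/json"
        payload = json.loads(response.read())
    assert payload == {"status": "ok"}, f"unexpected payload {payload}"
    return payload


def run_against_local_server() -> dict:
    """Start the stand-in service on a free port, run the check, then shut down."""
    server = HTTPServer(("127.0.0.1", 0), HealthHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        host, port = server.server_address
        return check_health(f"http://{host}:{port}")
    finally:
        server.shutdown()
        server.server_close()
```

A single request like this passes only if every component on the request path behaves, which is why API checks give broad feedback for relatively little code.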

The UI test

Drives the application through its user interface, as a user would: clicking, typing and navigating, then checking that the expected content is displayed.

It exercises the whole stack from the front end down, so it gives broad end-to-end feedback, but as a high-level test it is the slowest and most expensive to implement, execute, troubleshoot and maintain.

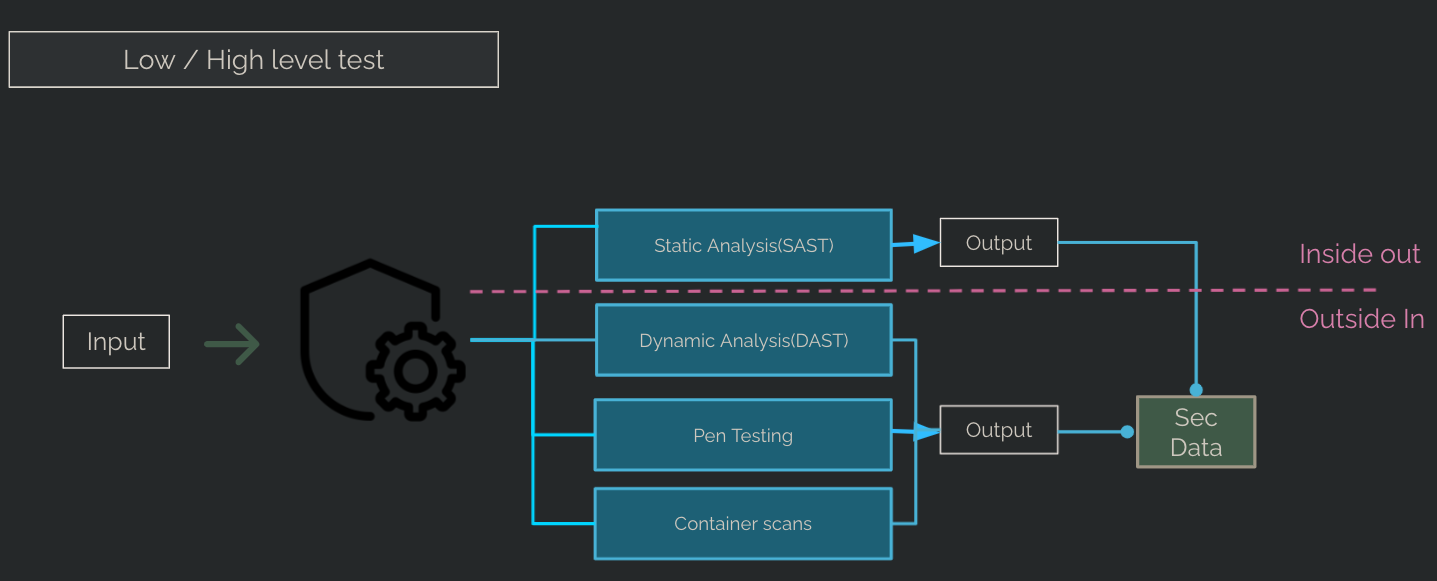

The Security test

A complex topic; at a high level, however, we want to know whether we have exposed ourselves to vulnerabilities in our code, containers and infrastructure.
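Automated vulnerability scanning is usually handled by a dedicated scanner (for example pip-audit for Python dependencies, or Trivy for container images). Purely to illustrate the idea behind dependency scanning, the sketch below compares pinned versions in a requirements-style string against a list of advisories. The advisory data, package names and IDs are all hypothetical, and real version schemes are more complex than the simple dotted integers parsed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    """A hypothetical advisory: versions below `fixed_in` are vulnerable."""
    package: str
    fixed_in: tuple
    advisory_id: str


def parse_version(text: str) -> tuple:
    """Parse a simple dotted version such as '1.4.2' into (1, 4, 2)."""
    return tuple(int(part) for part in text.split("."))


def parse_pins(requirements: str) -> dict:
    """Map package name -> version for lines of the form 'name==1.2.3'."""
    pins = {}
    for line in requirements.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "==" not in line:
            continue  # skip blanks, comments and unpinned entries
        name, _, version = line.partition("==")
        pins[name.strip().lower()] = parse_version(version.strip())
    return pins


def find_vulnerabilities(requirements: str, advisories: list) -> list:
    """Return a finding for every pinned package older than its fixed version."""
    pins = parse_pins(requirements)
    findings = []
    for adv in advisories:
        version = pins.get(adv.package.lower())
        if version is not None and version < adv.fixed_in:
            pinned = ".".join(map(str, version))
            findings.append(f"{adv.package} {pinned}: {adv.advisory_id}")
    return findings
```

In a CI or CD stage, a non-empty list of findings would typically fail the build, so the vulnerability is caught before it is deployed.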

Automation Observability

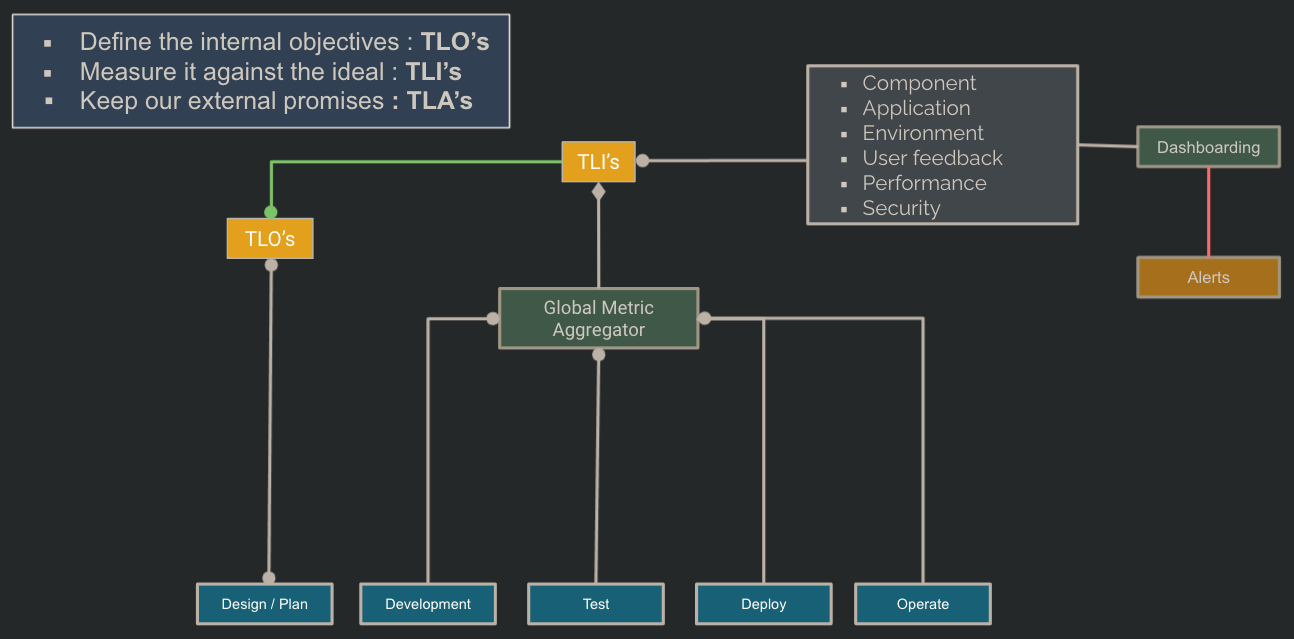

We want to understand how all of our automation suites are performing across all environments at any one time. To do this, we need to collate the data from each source and present it back as something useful, such as a dashboard.
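As a sketch of the collation step, assume each suite reports a simple pass/fail record tagged with its suite and environment (the `CheckResult` shape is an assumption, not a standard format). Results can then be grouped and summarised before being pushed to a dashboard; the `render` function only prints a text table as a stand-in for a real dashboard tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    suite: str        # e.g. "unit", "integration", "api", "ui"
    environment: str  # e.g. "dev", "test", "prod"
    passed: bool


def collate(results) -> dict:
    """Group results by (suite, environment) and compute each group's pass rate."""
    counts = defaultdict(lambda: [0, 0])  # (suite, env) -> [passed, total]
    for r in results:
        group = counts[(r.suite, r.environment)]
        group[0] += int(r.passed)
        group[1] += 1
    return {key: passed / total for key, (passed, total) in counts.items()}


def render(summary: dict) -> str:
    """Plain-text table standing in for a real dashboard panel."""
    rows = [f"{'suite':<12}{'env':<8}{'pass rate':>10}"]
    for (suite, env), rate in sorted(summary.items()):
        rows.append(f"{suite:<12}{env:<8}{rate:>10.0%}")
    return "\n".join(rows)
```

Keeping every source in one common record shape is what allows results from different tools and stages to be compared side by side.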

We introduce TLOs (test level objectives), TLAs (test level agreements) and TLIs (test level indicators), which are defined at design time to align with team and business objectives.

They aim to bring more clarity, accountability and transparency to the automation being executed. They also open communication channels and help to frame objectives.
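How TLIs and TLOs are calculated is left open here, so the following is only a sketch that borrows the SLI/SLO pattern from site reliability engineering: a TLI is a measured value (here, a suite pass rate or duration) and a TLO is the target it is compared against. The names `TLO`, `pass_rate_tli` and `report`, and the example targets, are illustrative assumptions. A TLA would be the agreement on what the team does when an objective is breached, which this code does not model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TLO:
    """A test level objective: a target that a test level indicator (TLI) must meet."""
    name: str
    target: float
    higher_is_better: bool = True

    def is_met(self, indicator: float) -> bool:
        if self.higher_is_better:
            return indicator >= self.target
        return indicator <= self.target


def pass_rate_tli(passed: int, total: int) -> float:
    """A TLI: the fraction of executed tests that passed."""
    if total == 0:
        raise ValueError("no tests were executed")
    return passed / total


def report(tlo: TLO, indicator: float) -> str:
    """One-line status suitable for a dashboard or alert."""
    status = "MET" if tlo.is_met(indicator) else "BREACHED"
    return f"{tlo.name}: {indicator:g} (target {tlo.target:g}) {status}"
```

For example, `TLO("API suite pass rate", 0.95)` would be met by 96 passes out of 100, while `TLO("UI suite duration (s)", 600, higher_is_better=False)` would be breached by a 700-second run. Agreeing such targets at design time gives the team an explicit threshold to alert on.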

Summary

The goal is a distributed approach to automation, where tests execute at each stage of the development lifecycle and their data is collated centrally and exposed through a series of dashboards.

This leads to a more sustainable, resilient automation solution that detects problems early, when they are easier to fix.